Exploring the latest concepts built on artificial neural networks in machine learning, and tackling large, difficult, and intricate problems, calls for a focus on modern algorithms, new architectures, and the applications of these networks. phdtopic.com is a destination for Advanced Topics in Artificial Neural Networks in Machine Learning. We have been working on machine learning for the past 13+ years and have reached major milestones. Whatever area of artificial neural networks you work in, we will share an original topic with a brief discussion.

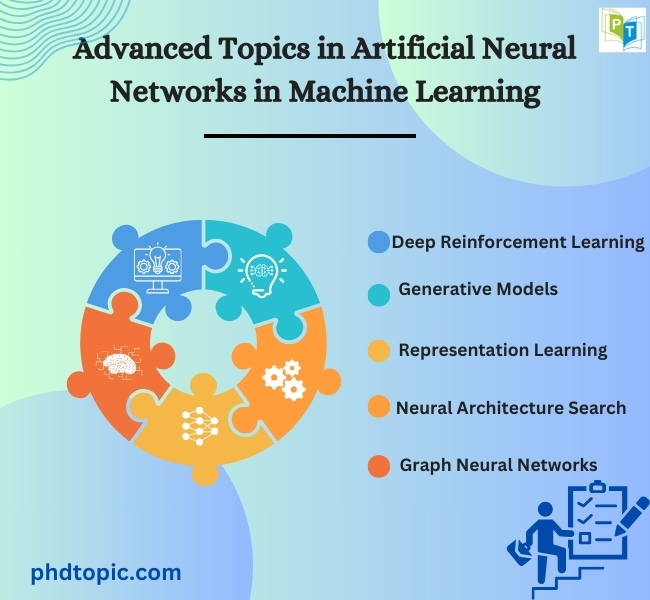

Below we discuss several recent concepts that reflect advances in applications and research in this domain:

- Deep Reinforcement Learning (DRL):

  - Hierarchical Reinforcement Learning: Learns to break complicated tasks down into a sequence of subtasks.

  - Off-Policy Reinforcement Learning: Learns about one policy while following another, which improves sample efficiency.

  - Multi-agent Reinforcement Learning: Multiple agents learn cooperatively or in competition with one another.

- Representation Learning:

  - Disentangled Representation Learning: Learns representations that separate the underlying factors of variation in data.

  - Contrastive Learning: Methods learn by distinguishing positive pairs from negative pairs in an embedding space.

- Neural Architecture Search (NAS):

  - Efficient NAS: Develops more efficient techniques for searching the large space of possible neural network architectures.

  - Transferable Architectures: Searches for architectures that transfer across different domains or tasks.

- Meta-Learning:

  - Learning to Learn: Techniques that adapt their learning procedure based on previous tasks.

  - Quick Adaptation: Methods that enable neural networks to adjust rapidly to novel tasks from only a few examples.

- Neural Ordinary Differential Equations (ODEs):

  - Neural SDEs: Extend neural ODEs to stochastic differential equations in order to model uncertainty.

  - ODE2VAE: Integrates variational autoencoders with ODEs to learn latent dynamics.

- Neural Network Quantization & Pruning:

  - Binary & Ternary Networks: Networks whose weights and activations use extremely low bit widths.

  - Structured Pruning: Prunes networks in a way that matches hardware capabilities.

- Domain Adaptation & Transfer Learning:

  - Unsupervised Domain Adaptation: Transfers knowledge from a labeled source domain to an unlabeled target domain.

  - Cross-Domain Few-Shot Learning: Learns to generalize across domains from only a few examples.

- Learning with Limited or Noisy Data:

  - Weakly Supervised Learning: Learns from noisy labels or from a small number of labels.

  - Robust Learning: Builds models that can learn from adversarial or corrupted data.

- Generative Models:

  - Diffusion Models: A class of generative models that produces impressive results in high-quality image generation.

  - Energy-Based Models (EBMs): Learn an energy function and sample from it to generate novel data points.

  - Implicit Generative Models: Train generative models that define a stochastic procedure without a tractable likelihood.

- Self-Supervised Learning:

  - Bootstrap Your Own Latent (BYOL): A technique for self-supervised image representation learning.

  - Masked Autoencoders: Apply autoencoding principles to learn representations, reconstructing masked parts of the input rather than reproducing the input exactly.

- Graph Neural Networks (GNNs):

  - Dynamic Graph Neural Networks: GNNs that handle graphs that change over time.

  - Graph Attention Networks: Use attention mechanisms to learn more nuanced relationships between nodes.

- Adversarial Machine Learning:

  - Adversarial Robustness: Improves the resilience of neural networks to adversarial attacks.

  - Adversarial Examples in the Physical World: Studies adversarial attacks beyond purely digital settings.

- Interpretability & Explainability:

  - Post-hoc Interpretability: Techniques for understanding trained models without modifying their design.

  - Intrinsic Interpretability: Models that are interpretable by construction.

- Advanced Sequence Modeling:

  - Transformers for Sequential Data: Applies and adapts transformer architectures to sequence modeling tasks beyond language.

  - Temporal Convolutional Networks (TCNs): Investigates alternatives to RNNs for modeling sequences with memory.

- Multi-Modal & Cross-Modal Learning:

  - Multi-Modal Fusion: Techniques for effectively combining information from different sensory modalities.

  - Cross-modal Hashing: Learns compact codes for retrieval across different data types (for example, image and text).
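To make one item from the list above concrete, here is a minimal sketch of contrastive learning as described under Representation Learning. It assumes an InfoNCE-style loss, which is one common formulation and not necessarily the one any particular project would use. The names `info_nce_loss` and `temperature` are illustrative, and the random vectors stand in for embeddings that a real encoder network would produce.

```python
import numpy as np


def info_nce_loss(anchors, positives, temperature=0.1):
    """InfoNCE-style contrastive loss over a batch (illustrative sketch).

    Row i of `anchors` and row i of `positives` form a positive pair;
    every other row of `positives` acts as a negative for anchor i.
    """
    # L2-normalize so that dot products are cosine similarities.
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)

    # Pairwise similarities, shape (batch, batch), scaled by the temperature.
    logits = (a @ p.T) / temperature
    # Subtract the row maximum for numerical stability.
    logits = logits - logits.max(axis=1, keepdims=True)

    # Row-wise log-softmax; the diagonal holds the positive pairs.
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))


# Toy data standing in for encoder outputs (an assumption for this sketch).
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(8, 16))

# Positives that are slight perturbations of the anchors.
aligned = info_nce_loss(embeddings, embeddings + 0.01 * rng.normal(size=(8, 16)))
# "Positives" that are unrelated random vectors.
unrelated = info_nce_loss(embeddings, rng.normal(size=(8, 16)))

print(f"aligned pairs:   {aligned:.4f}")
print(f"unrelated pairs: {unrelated:.4f}")
```

The loss should come out lower for the aligned pairs than for the unrelated ones, which is the signal an encoder is trained on: pull positive pairs together in the embedding space and push negatives apart.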

Progress in these areas depends not only on theoretical innovation but also on improvements in data availability and hardware. These are active research fields, and they are intended to produce practical improvements that affect a broad range of machine learning applications.

Neural Networks Research Topics & Ideas

We will share the best project ideas and titles, together with the problem we have identified and the methods we will use to reach the expected result. We work toward the expected results on the trending Neural Networks Research Topics & Ideas listed here, and we make sure that you receive the best research support.

- Identification of Nonlinear Systems With Unknown Time Delay Based on Time-Delay Neural Networks

- Forecasting stock index increments using neural networks with trust region methods

- Chaotic wandering and its sensitivity to external input in a chaotic neural network

- New Delay-Dependent Stability Criteria for Neural Networks With Time-Varying Delay

- Stability and Dissipativity Analysis of Distributed Delay Cellular Neural Networks

- A New Criterion of Delay-Dependent Asymptotic Stability for Hopfield Neural Networks With Time Delay

- Complete Delay-Decomposing Approach to Asymptotic Stability for Neural Networks With Time-Varying Delays

- On Stabilization of Stochastic Cohen-Grossberg Neural Networks With Mode-Dependent Mixed Time-Delays and Markovian Switching

- Global Robust Stability Criteria for Interval Delayed Full-Range Cellular Neural Networks

- Local Stability Analysis of Discrete-Time, Continuous-State, Complex-Valued Recurrent Neural Networks With Inner State Feedback

- Comparative analysis of artificial neural network models: application in bankruptcy prediction

- The research on the fault diagnosis for boiler system based on fuzzy neural network

- An efficient learning algorithm with second-order convergence for multilayer neural networks

- Quantum neural networks versus conventional feedforward neural networks: an experimental study

- Improved generalization ability using constrained neural network architectures

- Novel Predictive Analyzer for the Intrusion Detection in Student Interactive Systems using Convolutional Neural Network algorithm over Artificial Neural Network Algorithm

- Memory capacity bound and threshold optimization in recurrent neural network with variable hysteresis threshold

- Synchronization of Memristor-Based Coupling Recurrent Neural Networks With Time-Varying Delays and Impulses

- Optimal solutions of selected cellular neural network applications by the hardware annealing method

- Differential equations accompanying neural networks and solvable nonlinear learning machines