In machine learning projects, metrics are used to evaluate how well a model performs. We select the appropriate metrics based on the problem category (such as classification, clustering, or regression) and the project goal. We carry out the work as per your tailored requirements. The following is a description of machine learning metrics for different kinds of tasks:

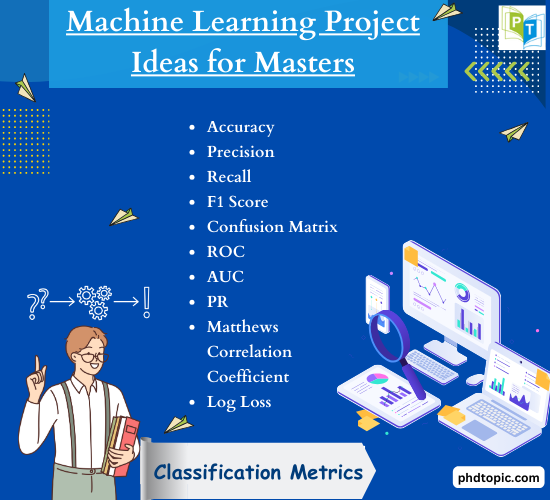

Classification Metrics:

- Accuracy: The proportion of all evaluated cases that the model classifies correctly.

- Precision: The ratio of true positive predictions to all predicted positives.

- Recall (Sensitivity): The ratio of true positive predictions to all actual positive cases.

- F1-Score: The harmonic mean of precision and recall.

- Confusion Matrix: A table that shows the counts of true positives, true negatives, false positives, and false negatives.

- ROC (Receiver Operating Characteristic) Curve: A plot of the true positive rate against the false positive rate that shows the performance of a classification model at all classification thresholds.

- AUC (Area Under the ROC Curve): Summarizes the ROC curve in a single value.

- PR (Precision-Recall) Curve: A plot that shows the trade-off between precision and recall at various thresholds.

- Matthews Correlation Coefficient (MCC): A coefficient, computed from the true and false positives and negatives, that measures the quality of a binary classification. It ranges from -1 to +1.

- Log Loss (Logarithmic Loss): Measures the performance of a classifier whose predicted output is a probability value between 0 and 1.
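
The classification metrics above can be sketched in plain Python as follows. This is a minimal illustration, not a reference implementation: the function names (`confusion_counts`, `binary_metrics`, `roc_auc`, `log_loss`), the 0/1 label encoding, the 0.5 threshold, and the sample data are all assumptions made for the example. The AUC here uses the pairwise-ranking interpretation (the probability that a random positive scores higher than a random negative).

```python
import math

def confusion_counts(y_true, y_pred):
    """Count (tp, fp, fn, tn) for binary labels encoded as 0 and 1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, F1 and MCC from hard 0/1 predictions."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1, "mcc": mcc}

def roc_auc(y_true, y_score):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair. Assumes both classes are present.
    """
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def log_loss(y_true, y_prob, eps=1e-15):
    """Mean negative log-likelihood; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(y_true)

# Hypothetical sample data: true labels and predicted probabilities.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_prob = [0.9, 0.2, 0.6, 0.4, 0.7, 0.1, 0.8, 0.3]
y_pred = [1 if p >= 0.5 else 0 for p in y_prob]  # assumed 0.5 threshold

report = binary_metrics(y_true, y_pred)
auc = roc_auc(y_true, y_prob)
loss = log_loss(y_true, y_prob)
print(report, auc, loss)
```

Note that precision, recall, F1, and MCC depend on the chosen threshold, while AUC and log loss are computed from the probabilities directly.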

Regression Metrics:

- Mean Absolute Error (MAE): The mean of the absolute differences between predicted and actual values.

- Mean Squared Error (MSE): The mean of the squared differences between predicted and actual values.

- Root Mean Squared Error (RMSE): The square root of the MSE, which expresses the error in the same units as the target.

- Root Mean Squared Logarithmic Error (RMSLE): The same as RMSE, except that it is computed on the logarithms of the predicted and actual values.

- R-Squared (Coefficient of Determination): The proportion of variance in the dependent variable that is explained by the independent variables.

- Adjusted R-Squared: A modification of R-Squared that accounts for the number of predictors in the model.

- Mean Squared Logarithmic Error (MSLE): The same as RMSLE, but without taking the square root.

- Mean Bias Deviation (MBD): The mean bias of the predictions, which shows whether the model tends to over-predict or under-predict.
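
As a rough sketch, the regression metrics above can be computed as shown below. The function names and sample values are hypothetical. Two conventions are assumed and may differ from the one used in a given project: the logarithmic errors use `log(1 + x)` (a common choice so that zero values are allowed), and MBD is taken as the mean of `predicted - actual`, so a positive value means over-prediction.

```python
import math

def mae(y_true, y_pred):
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)

def mse(y_true, y_pred):
    return sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    return math.sqrt(mse(y_true, y_pred))

def msle(y_true, y_pred):
    # Assumption: log(1 + x) is used, so values must be greater than -1.
    return sum((math.log1p(p) - math.log1p(t)) ** 2
               for t, p in zip(y_true, y_pred)) / len(y_true)

def rmsle(y_true, y_pred):
    return math.sqrt(msle(y_true, y_pred))

def r_squared(y_true, y_pred):
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

def adjusted_r_squared(y_true, y_pred, n_predictors):
    # Penalizes R-squared for the number of predictors in the model.
    n = len(y_true)
    r2 = r_squared(y_true, y_pred)
    return 1 - (1 - r2) * (n - 1) / (n - n_predictors - 1)

def mean_bias_deviation(y_true, y_pred):
    # Assumption: sign convention is predicted minus actual.
    return sum(p - t for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical sample data.
y_true = [3.0, 5.0, 2.5, 7.0]
y_pred = [2.5, 5.0, 4.0, 8.0]

print("MAE  ", mae(y_true, y_pred))
print("MSE  ", mse(y_true, y_pred))
print("RMSE ", rmse(y_true, y_pred))
print("RMSLE", rmsle(y_true, y_pred))
print("R2   ", r_squared(y_true, y_pred))
print("AdjR2", adjusted_r_squared(y_true, y_pred, n_predictors=1))
print("MBD  ", mean_bias_deviation(y_true, y_pred))
```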

Clustering Metrics:

- Silhouette Coefficient: Measures how similar an object is to its own cluster compared with other clusters.

- Davies-Bouldin Index: The average similarity of each cluster with its most similar cluster. Lower values indicate better-separated clusters.

- Calinski-Harabasz Index: The ratio of the between-cluster dispersion to the within-cluster dispersion, summed over all clusters. Higher values indicate better-defined clusters.
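
The three clustering indices can be illustrated with a small pure-Python sketch. It assumes Euclidean distance, at least two clusters with distinct centroids, and a score of 0 for points in singleton clusters; the helper names and the toy points are hypothetical.

```python
import math

def dist(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def centroid(points):
    return [sum(coords) / len(points) for coords in zip(*points)]

def group_by_label(points, labels):
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    return clusters

def silhouette_score(points, labels):
    """Mean of (b - a) / max(a, b) over all points."""
    clusters = group_by_label(points, labels)
    scores = []
    for point, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)  # assumed convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster
        a = sum(dist(point, q) for q in own) / (len(own) - 1)
        # b: mean distance to the members of the nearest other cluster
        b = min(sum(dist(point, q) for q in other) / len(other)
                for key, other in clusters.items() if key != label)
        scores.append((b - a) / max(a, b) if max(a, b) else 0.0)
    return sum(scores) / len(scores)

def davies_bouldin_index(points, labels):
    """Mean over clusters of the worst (scatter_i + scatter_j) / centroid distance."""
    clusters = group_by_label(points, labels)
    cents = {k: centroid(v) for k, v in clusters.items()}
    scatter = {k: sum(dist(p, cents[k]) for p in v) / len(v)
               for k, v in clusters.items()}
    worst = [max((scatter[i] + scatter[j]) / dist(cents[i], cents[j])
                 for j in clusters if j != i)
             for i in clusters]
    return sum(worst) / len(worst)

def calinski_harabasz_index(points, labels):
    """(between-cluster dispersion / (k - 1)) / (within-cluster dispersion / (n - k))."""
    clusters = group_by_label(points, labels)
    n, k = len(points), len(clusters)
    overall = centroid(points)
    between = sum(len(v) * dist(centroid(v), overall) ** 2
                  for v in clusters.values())
    within = sum(dist(p, centroid(v)) ** 2
                 for v in clusters.values() for p in v)
    return (between / (k - 1)) / (within / (n - k))

# Hypothetical toy data: two well-separated groups of three points.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
good_labels = [0, 0, 0, 1, 1, 1]
bad_labels = [0, 1, 0, 1, 0, 1]

print(silhouette_score(points, good_labels))
print(davies_bouldin_index(points, good_labels))
print(calinski_harabasz_index(points, good_labels))
```

Comparing `good_labels` with `bad_labels` shows the expected direction of each index: a sensible grouping yields a higher silhouette and Calinski-Harabasz value and a lower Davies-Bouldin value.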

Ranking Metrics:

- Mean Reciprocal Rank (MRR): The mean, over all queries, of the reciprocal of the rank at which the first relevant result appears among the predicted results.

- Normalized Discounted Cumulative Gain (nDCG): Measures the quality of a ranking model based on the graded relevance of the predicted items, with items lower in the ranking contributing less.

- Average Precision at k (AP@k): The average of the precision values at each rank where a relevant item appears, considering only the top k predictions. (Precision at a fixed cutoff rank alone is Precision@k.)
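
A minimal sketch of the ranking metrics is shown below. The function names and sample rankings are hypothetical. Two assumptions are worth flagging: nDCG uses the linear-gain form with a `log2(rank + 1)` discount, and AP@k is normalized by `min(k, number of relevant items)`, which is one of several conventions in use.

```python
import math

def reciprocal_rank(ranked, relevant):
    """1 / rank of the first relevant item, or 0 if none is retrieved."""
    for rank, item in enumerate(ranked, start=1):
        if item in relevant:
            return 1.0 / rank
    return 0.0

def mean_reciprocal_rank(queries):
    """queries is a list of (ranked_items, relevant_set) pairs."""
    return sum(reciprocal_rank(r, rel) for r, rel in queries) / len(queries)

def dcg_at_k(gains, k):
    # gains[i] is the graded relevance of the item at rank i + 1
    return sum(g / math.log2(rank + 1)
               for rank, g in enumerate(gains[:k], start=1))

def ndcg_at_k(gains, k):
    ideal = dcg_at_k(sorted(gains, reverse=True), k)
    return dcg_at_k(gains, k) / ideal if ideal else 0.0

def precision_at_k(ranked, relevant, k):
    return sum(1 for item in ranked[:k] if item in relevant) / k

def average_precision_at_k(ranked, relevant, k):
    hits, total = 0, 0.0
    for rank, item in enumerate(ranked[:k], start=1):
        if item in relevant:
            hits += 1
            total += hits / rank  # precision at this rank
    denom = min(k, len(relevant))  # assumed normalization
    return total / denom if denom else 0.0

# Hypothetical sample data.
queries = [
    (["a", "b", "c"], {"b"}),   # first relevant item at rank 2
    (["x", "y"], {"x"}),        # first relevant item at rank 1
    (["m", "n"], {"z"}),        # no relevant item retrieved
]
ranked = ["d3", "d1", "d7", "d2"]
relevant = {"d1", "d2"}

mrr = mean_reciprocal_rank(queries)
ap4 = average_precision_at_k(ranked, relevant, k=4)
p3 = precision_at_k(ranked, relevant, k=3)
ndcg = ndcg_at_k([0, 1], k=2)
print(mrr, ap4, p3, ndcg)
```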

Time Series Metrics:

- MAPE (Mean Absolute Percentage Error): Expresses the size of the error as a percentage of the actual values.

- SMAPE (Symmetric Mean Absolute Percentage Error): A symmetric variant of MAPE that uses both the predicted and the actual values in the denominator.
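
The two percentage errors can be sketched as follows. The function names and sample series are hypothetical. SMAPE has several published variants; this sketch assumes the form that divides by the mean of the absolute actual and predicted values, and treats a 0/0 term as zero error. MAPE is undefined when an actual value is zero, so the sketch raises an error in that case.

```python
def mape(actual, forecast):
    """Mean absolute percentage error, in percent."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100.0 * sum(abs(f - a) / abs(a)
                       for a, f in zip(actual, forecast)) / len(actual)

def smape(actual, forecast):
    """Symmetric MAPE, in percent (assumed variant: mean of |a| and |f| in the denominator)."""
    total = 0.0
    for a, f in zip(actual, forecast):
        denom = (abs(a) + abs(f)) / 2
        total += abs(f - a) / denom if denom else 0.0
    return 100.0 * total / len(actual)

# Hypothetical sample series.
actual = [100, 200, 50]
forecast = [110, 180, 50]

print(mape(actual, forecast))
print(smape(actual, forecast))
```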

Other Metrics:

- Cohen’s Kappa: Measures the agreement between two raters who each classify items into the same set of categories, corrected for the agreement expected by chance.

- Gini Coefficient: A measure of statistical dispersion, originally intended to represent the income or wealth inequality of a population. In model evaluation it is often reported as 2 × AUC − 1.
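
A short sketch of both measures follows. The function names and sample data are hypothetical. The Gini coefficient here is the dispersion form described above, computed from sorted non-negative values; returning 1.0 for kappa when chance agreement is already perfect is an assumed convention for that degenerate case.

```python
from collections import Counter

def cohen_kappa(rater_a, rater_b):
    """(observed agreement - chance agreement) / (1 - chance agreement)."""
    n = len(rater_a)
    observed = sum(1 for a, b in zip(rater_a, rater_b) if a == b) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: both raters pick the same category independently.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # assumed convention: both raters always use one category
    return (observed - expected) / (1 - expected)

def gini_coefficient(values):
    """Gini coefficient of non-negative values (0 means perfect equality)."""
    xs = sorted(values)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    weighted = sum(rank * x for rank, x in enumerate(xs, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n

# Hypothetical sample data: two raters labelling ten items as 0 or 1.
rater_a = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
rater_b = [1, 0, 0, 0, 1, 0, 1, 1, 1, 0]

print(cohen_kappa(rater_a, rater_b))
print(gini_coefficient([1, 1, 1, 1]))
print(gini_coefficient([0, 0, 0, 10]))
```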

It is essential to understand the strengths and limitations of each metric when using it. For instance, accuracy can be misleading when classes are imbalanced, so precision, recall, and F1-score are more informative in that case. In regression tasks, RMSE penalizes large errors more heavily than small ones. The choice of metrics must suit the project goal and the nature of the data.
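
Both cautions can be seen in a small worked example. The data is made up for illustration: a model that always predicts the majority class on a 95/5 split, and two error patterns with the same total absolute error.

```python
import math

# Class imbalance: 95 negatives, 5 positives, and a model that always
# predicts the negative class.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100

accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
true_positives = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
recall = true_positives / sum(y_true)
print(accuracy, recall)  # 0.95 0.0

# Large errors: both error patterns have the same MAE, but RMSE is
# higher when the error is concentrated in a single large mistake.
even_errors = [1, 1, 1, 1]
spiky_errors = [0, 0, 0, 4]

def mae(errors):
    return sum(abs(e) for e in errors) / len(errors)

def rmse(errors):
    return math.sqrt(sum(e ** 2 for e in errors) / len(errors))

print(mae(even_errors), rmse(even_errors))    # 1.0 1.0
print(mae(spiky_errors), rmse(spiky_errors))  # 1.0 2.0
```

The classifier reaches 95% accuracy without finding a single positive case, and the two regression error patterns are indistinguishable by MAE while RMSE doubles for the one with a single large error.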

MS THESIS in Machine Learning

A good thesis is required to achieve your goal and to uplift your research work. Have a look at the latest thesis topics in ML that we have framed.

- Saga: An Open-Source Platform for Training Machine Learning Models and Community-driven Sharing of Techniques

- Classification of Minority Attacks using Machine Learning

- DNA Microarray Analysis Using Machine Learning to Recognize Cell Cycle Regulated Genes

- Auto-SCMA: Learning Codebook for Sparse Code Multiple Access using Machine Learning

- High Performance Machine Learning Models for Functional Verification of Hardware Designs

- Adaptive Positioning System Design Using AR Markers and Machine Learning for Mobile Robot

- Analysis of Data Sets With Learning Conflicts for Machine Learning

- Analysis of Machine Learning for Recognizing Hand Gestures

- Integrating machine learning concepts into undergraduate classes

- Exploring Edge Machine Learning-based Stress Prediction using Wearable Devices

- A comparative study on machine learning algorithms for green context-aware intelligent transportation systems

- Enhancing indoor positioning based on partitioning cascade machine learning models

- A distributed computing framework based on lightweight variance reduction method to accelerate machine learning training on blockchain

- Indirect PCA Dimensionality Reduction Based Machine Learning Algorithms for Power System Transient Stability Assessment

- Cloud Detection and Classification for S-NPP FSR CRIS Data Using Supervised Machine Learning

- Machine-Learning-Based Classification of GPS Signal Reception Conditions Using a Dual-Polarized Antenna in Urban Areas

- Enhancing Distributed Data Integrity Verification Scheme in Cloud Environment Using Machine Learning Approach

- Automated Early Prediction of Employee Attrition in Industry Using Machine Learning Algorithms

- Feasibility Study on Machine Learning-based Method for Determining Self-and Mutual Inductance

- Power Quality Improvement and Fault Diagnosis of PV System By Machine Learning Techniques